Inspiration for project: The Binding of Isaac Rebirth

The Binding of Isaac is a roguelike video game developed primarily by Edmund McMillen. It was initially released for Windows in September 2011 and was later ported to Mac and Linux. The game is 2D and places a playable character in a procedurally generated dungeon, where the player must defeat the enemies and boss of each level whilst acquiring power-ups along the way. It is worth noting that the game becomes more challenging as the levels progress, with enemies and bosses becoming more difficult the further the player gets.

An article (McHugh, 2018) describes the term 'Roguelike' by stating that "A roguelike means simply that it shares some of the fundamental features of the original Rogue. Permadeath, procedural generation, and being turnbased are often the core tenets of what makes up a roguelike." McHugh is referring to the video game 'Rogue' (1980), which was popular for its procedurally generated game world. It also featured some interesting game mechanics, such as 'Permadeath', which means that if the player dies, the game is reset and all progress is lost. Because the game is procedurally generated, each playthrough is a unique experience.

The video game 'The Binding of Isaac' shares some of these core mechanics: it too is procedurally generated, and it also incorporates the 'Permadeath' mechanic. However, it differs from 'Rogue' in that it is not turn-based, a mechanic which is less common among popular Roguelike video games. Some examples of these are Spelunky, Crypt of the NecroDancer and Faster Than Light.

I have spent a significant amount of time playing The Binding of Isaac, and I really enjoy its Permadeath and procedurally generated dungeons, as they provide a unique experience each time. The Binding of Isaac procedurally generates the enemies, the boss of the level, the type of level and the power-ups available, as well as the playable character. Moreover, Permadeath serves as a progression mechanic, as you are able to unlock new power-ups and levels upon completing the game (or sometimes upon dying), giving more reason to play it again. This adds a huge layer of depth to the replayability of the game, which is the main reason I have been able to invest so much play-time in it. This element of replayability is something I really want to focus on in the video game that I will be prototyping for this assignment.

Initial idea for project:

For my game, I'd like to create a third-person roguelike in which the player progresses through the game world until a designated ending point. However, the ending point would be a 'checkpoint', and the player would play again to unlock the next 'checkpoint'. The idea is to give the game much more replayability, with randomised elements such as enemies or items.

Considering the story of the game, the playable character would be a skeleton who wishes to be a real human and not just bones. Therefore, the character explores the game world, collecting power-ups and defeating any enemies along the way. Picking up items benefits the player by improving the character's offence or defence (e.g. health, armour, weapons). As you progress in the game, the story begins to unfold as to why the skeleton character is like this, what happened and how. This could involve cut scenes, or dialogue between characters.

The game will be set in levels, where you can progress to the next level once you have defeated the boss of the current level. The boss will be a defeatable NPC (Non-player character) which will leave behind a key to unlock the door to the next level. Beating the levels of the game world will be progressively harder, but each level produces new and better items, giving the player a better chance each time. This would add a challenge factor to the game, perhaps introducing a social element of the game as to how far a player was able to get before dying, or perhaps they made it to the end, etc.
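
The boss-and-key progression described above could be sketched roughly as follows. This is only a minimal illustration in Python rather than Unreal Engine code, and the names `Player`, `Boss`, `can_open` and the key name `next_level_key` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Player:
    keys: Set[str] = field(default_factory=set)  # keys picked up so far


@dataclass
class Boss:
    health: int
    drops: str = "next_level_key"  # hypothetical key name

    def take_damage(self, amount: int) -> Optional[str]:
        """Apply damage; return the dropped key on the killing blow, else None."""
        if self.health <= 0:
            return None  # already defeated, nothing more to drop
        self.health = max(0, self.health - amount)
        return self.drops if self.health == 0 else None


def can_open(door_key: str, player: Player) -> bool:
    """A door opens only if the player holds its matching key."""
    return door_key in player.keys
```

In this sketch the key is returned by the killing blow and the caller adds it to `player.keys`, which mirrors the boss "leaving behind" a key for the player to pick up.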

I'd like to focus on an RNG (random number generation) element of the game, to randomise the experience. For instance, if the player comes across an item, it could be one of a select few items rather than the same one each time (for example, if items 1-6 are possible and the RNG lands on 5, you get item 5, but this could be different for anyone). I'd also like to apply this to the enemies and boss NPCs that the player finds across the game world, where the game code will randomly select the enemy to appear rather than the same ones appearing each time. I could also add extra elements to this, such as spawning specifically harder enemies if the player was unlucky enough not to find a certain item, presenting more of a challenge and perhaps a learning curve.
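
As a rough illustration of the RNG idea, the selection logic might look something like the sketch below. It is written in Python purely for clarity, not as engine code. The function names, the two enemy lists and the `counter_item` parameter are assumptions made for the example; only the "items 1-6" pool comes from the description above.

```python
import random

ITEM_POOL = [1, 2, 3, 4, 5, 6]  # the "items 1-6" from the text


def roll_item(rng: random.Random, pool=ITEM_POOL):
    """Pick one item uniformly at random from the pool."""
    return rng.choice(pool)


def pick_enemy(rng, normal_enemies, hard_enemies, player_items, counter_item):
    """Pick an enemy; use the harder list if the player lacks counter_item."""
    candidates = normal_enemies if counter_item in player_items else hard_enemies
    return rng.choice(candidates)
```

Passing in a seeded `random.Random` instance (for example `random.Random(42)`) makes a run reproducible, which can help when testing, while an unseeded one gives a different result each playthrough.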

I want to use the Fantasy art style for my game, as the nature of the game could be a bit macabre if the art style was photorealistic by comparison. This would also be much harder to produce accurately, and be more draining on performance. Therefore, I propose a simplified look for my game, so it appears to be detailed and convincing, yet unrealistic. I also want the character to remain in the third-person perspective, so the player can view the character better. This is so that when the character picks up new power-ups (such as a weapon), the user can easily recognise this visually. This also allows the player to fully observe the character if they so wish.

What am I creating for this assignment?:

I am going to be creating a playable prototype of a game based around the Roguelike genre. This game will be 3 dimensional, and will be played from a third-person perspective. This game will be created mainly in Unreal Engine, but other packages such as Maya may be used to aid in the development. This game will be specifically developed for use on computers, not for consoles or other devices. The game will be primarily tested and built for a Windows operating system, and will be packaged to support Mac users as well. The game will not support Linux operating systems.

What will my prototype include?:

My prototype will involve a playable character which is able to fight at least one enemy or boss, to demonstrate the combat mechanic the game will include. There needs to be one playable level in the game, and a system in place to allow progression to the next level, although a subsequent level does not need to be designed for the prototype. There should also be a custom 3D character suitable for use in Unreal Engine. Default animations are suitable, but an animation for attacking would be required at minimum. A basic representation of the UI should also be displayed on screen, showing some important information such as the character stats and current level. The graphics may be temporary for the purposes of the game prototype, but this UI should scale correctly to work on any size of monitor. Finally, the game must include a winning and a losing condition. The winning condition will be reaching the point where the player can proceed to the next level (for the prototype, this can be considered winning), and the losing condition will be the character dying.
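
The winning and losing conditions could be expressed as a small state check along these lines. This is a hedged sketch in Python, and `GameState`, `evaluate`, `health` and `reached_exit` are hypothetical names chosen for the example.

```python
from enum import Enum


class GameState(Enum):
    PLAYING = "playing"
    WON = "won"
    LOST = "lost"


def evaluate(health: int, reached_exit: bool) -> GameState:
    """Decide the game state from the character's health and position."""
    if health <= 0:
        return GameState.LOST  # losing condition: the character dies
    if reached_exit:
        return GameState.WON  # winning condition: proceeding to the next level
    return GameState.PLAYING
```

Death is checked first here so that a character who dies on the same frame as reaching the exit still loses; that ordering is a design choice rather than a requirement.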

Concept art: Character

Here is a simple sketch of what the character could look like. The character is a simple skeleton with a minimalistic style. The character will have eyes, but they will simply be 2 'orbs' that glow from inside the eye sockets, as opposed to eye balls. These could glow and offer lighting effects depending on whether this is feasible or not.

This is an example of how the layout of the first level might look. The first level is intended to serve as a 'starter level' in which the layout and difficulty are very simple, allowing players to grasp the basic controls and mechanics, as well as possible strategies. This will be Level 1 of the game. The character will always spawn at the designated spawn point. This layout includes a boss room, as well as an exit point for entering the next level of the game, designated 'Stage 2' above. The crosses on the layout suggest where possible spawn points of enemies could be placed. Finally, the key used to unlock the boss room door can be found in the uppermost room in the layout.

I have drawn a more detailed design for the spawn location. The character spawns next to a coffin, linking to the character design. Furthermore, the 'starter level' or Level 1 will be set in the theme of an underground crypt. The idea is that - thematically - the character awakens from a coffin, and has to exit through the crypts he has been buried deep within. As a result, power-ups and enemies will also fit this theme. These themes will change across the levels (for example, Level 2 could be set in a graveyard in the outdoors, with thematically matching power-ups and enemies here too).

Here is a simple mock-up of what the UI could look like, although it is subject to change. I have tried to keep the screen as uncluttered as possible so that the UI does not unnecessarily block out portions of the screen. I have focused primarily on keeping the UI to a banner across the top portion of the screen (although this could be changed to a vertical banner along the side/s of the screen), so that the user can always look up for information rather than having to search the screen for the right section. The top of the screen includes the most important information, such as the health of the character. In addition, a small square at the bottom left (or right) of the screen will be dedicated to showing which level the player is on, as well as the following level. This could also indicate a bit of extra information, such as whether a key to enter the boss room is needed, or whether the boss must be defeated, in order to progress.
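
One way to keep a layout like this working on any monitor size is to size the UI elements as fractions of the screen rather than in fixed pixels. The sketch below shows the idea in Python. The function name and the fractions (10% of the screen height for the banner, 12% for the level square) are assumptions for illustration, not values taken from the mock-up.

```python
def layout_ui(screen_w, screen_h, banner_frac=0.10, square_frac=0.12):
    """Return UI rectangles as (x, y, width, height), origin at the top-left."""
    banner_h = round(screen_h * banner_frac)
    side = round(screen_h * square_frac)
    return {
        # Banner spans the full width along the top of the screen.
        "banner": (0, 0, screen_w, banner_h),
        # Level indicator is a square anchored to the bottom-left corner.
        "level_box": (0, screen_h - side, side, side),
    }
```

Because every size is derived from the resolution, the banner stays attached to the top edge and the square stays in the corner whether the screen is 1280x720 or 1920x1080.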

Here are some examples of power-ups that could be available for the player on Level 1. These are primarily weapons/shields, and are thematically based around the first level being set in a Crypt. These involve parts of the skeleton fashioned into weapons or shields.

Brainstorming ideas for title of game:

I created a short list of terms/phrases that could serve as a suitable title for my game. Here are some of the initial options I had thought of:

- Cursed - Character is a skeleton but still alive, perhaps is cursed, links to title

- Bloodlust - Character defeats enemies, relates to title

- Eternal - Character is a skeleton but still alive, perhaps has eternal life, links to title

- Vengeance - Character is out for revenge, relates to plot(?)

- Renegade - Character is the only one against all other enemy characters, links to title

- Reborn - Character is a skeleton but still alive, perhaps was reborn, links to title

- Coffin Fever - Character awakes from coffin, but this is unrealistic, title is a play on the phrase 'cabin fever'

From these options, I decided Coffin Fever would be my choice of game title, as it sounds unique and interesting. I also liked the play on the phrase 'cabin fever', suggesting that the skeleton the player starts as has had cabin fever from being dead, and has now come to life.

Production: Creating the character

To begin the production of my assignment, I decided to start by designing the character for my game. I would stop after the character has been modeled and textured and then move on to the environment design.

To make my character, I decided I would use the 'high to low poly' method, in which you start by making the character in high poly and then convert it into a low poly character, for use in the game engine. If possible, normal textures could be used to add this detail back to the character, effectively 'baking' the detail into the character.

I would start in Z-brush to make the high poly character, as I am familiar with the program and it is capable of producing very high-detail sculpts quickly. I would then export this into Maya, in which I would re-topologise the mesh into something more suitable, with a relatively low poly count that can be handled easily and effectively by the game engine. I could then texture and rig the character in Maya, and export it for use in Unreal Engine.

Z-brush:

I started by making a very rough outline of the character's proportions. I did this using the ZSpheres tool to create the bone structure, and then turned it into an editable mesh, where I started to add some indication of which areas were which. You can see I have deliberately posed the body in an A-Pose so that it works better when rigged/skinned, as well as perhaps fitting the game engine better. I mostly used the Standard tool to create a basic structure of the character, smoothing where appropriate. I was working with symmetry whilst doing this for a mirrored appearance, and will be using symmetry/mirror tools throughout the design process.

Using this as a base, I then began to create the parts of the skeleton bit by bit, so that the body parts would remain separate if need be. I did this by adding spheres (one per bone) and then using the Move tool to pull them into the correct shape. I would then use the Clay/Standard tools to add details to the bone, and smooth them over for a clean result.

To create the rib cage, I used a secondary sphere as a boolean shape to cut a hole in the inside of the shape, giving the bone its hollow interior. I repeated this process for each 'rib', and built up the rest of the rib cage in the same way.

Keeping them separate layers (or SubTools) was staying true to my original drafts, and I thought it looked better too. This would also make the individual bones easier to create. I used reference for a couple bones that were complex, such as the rib cage.

I continued the process, using reference for the skull and pelvis. It is worth noting that my character is not intended to be an anatomically realistic skeleton, but rather a recognisable human skeleton stylized in my own way.

After some time, I had a full skeleton character. I had mirrored the legs and arms so they would match. I also added some extra details such as teeth and eyes, although the eyes would serve as a base for proper implementation. However, I continued to add more details to give it a more stylized appearance.

After some work, here is my final character design in Z-brush. The main change is to the skull, in which I have worked very hard on giving a stylized appearance/facial expression, which I think works so much better than the previous iteration. I mostly used the Standard and Clay tools to do this because they were useful to provide more defined detail in the model.

I've also added a small 'bone' for the hand (palm), a bit like I have done with the foot. In a real skeleton this would typically be several bones and bits of cartilage, I believe, but for the purposes of my stylized character this works best in my opinion. I also closed the hands into fists, as I did not need to rig the fingers and they would therefore need to be closed before exporting from Z-brush. This was easy since they were all their own SubTools.

I then exported this file out of Z-brush, ready to be imported into Maya.

Maya - Retopology:

I was able to import my character successfully into Maya from Z-brush. My task now was to re-topologise it into a low-poly model. I decided to use the Quad Draw tool to do this, and I enabled the 'Snap to live mesh' option so the new quads being drawn would snap to the selected part of the mesh. This meant it was a case of 'going over' the existing model with the tool.

I started with the skull, in which I started by adding the loops to the model. This was a bit more tricky to know where to add, as the head was not a smooth rounded shape as a typical face is, but I was able to create two important loops around the eye sockets and nose cavity. I did this with symmetry enabled across the X-axis to keep both sides completely matched, also saving time.

I then proceeded to fill in the necessary areas of the model. I also created a 'strip' of quads across the middle section of the skull to create a seam across the middle of the shape, something I wanted to do across the entire model to make UV-mapping easier later on.

After some time, I had completed the skull re-topology. I was able to get very clean topology thanks to the 'Relax' option of the Quad Draw tool, where it will try to smooth out the topology of the surface. I enabled the Soft Selection tool so I could relax areas of the model as opposed to a few quads at a time. Throughout this re-topology process, I tried to use the 3-spoke and 5-spoke joints at quads effectively. A good 3-spoke quad can be seen in the image above at the back of the skull.

I continued the re-topology for the jaw and eyes. This was done in essentially the same way, although I could not identify any loops to make in either.

I decided not to re-topologise the teeth because, oddly enough, they were already very low poly and made entirely of quads. As each bone/area was its own SubTool in Z-brush, I must have forgotten to add divisions to the teeth SubTool.

After the skull was complete, I moved to the rib cage, arguably the hardest area of my mesh to re-topologise. I started by creating a loop across the middle of the rib cage, and then branched it out. I kept the poly structure the same, where you had 2 quads for the section with ribs, and then 1 quad for the gap.

I then repeated the same structure on the back, and then connected the 2 sides together. After a lot of time, I was able to connect all the quads to cover the entire rib cage shape. I did make a few mistakes in the re-topology here, however. Specifically, when re-topologising the ribs, I had missed a couple of quads, so some loops would not run all the way around the ribs. This was only a minor issue, so I continued on.

I continued with the pelvis region of the character, which was also difficult to add loops for. I was only able to create a 'half loop' around the rear end of the pelvis, but this was still helpful.

I continued the process as discussed previously until this point, where I had completed the skull, rib cage, pelvis, legs and feet.

Here is a shot from behind the character too. Overall the detail in the shape has been preserved fairly well.

I moved on to the arms of the character, which were very easy to re-topologise, like the legs were.

Finishing the re-topology process, I did the hands and then the fingers and toes. To save a bit of time, I did one finger/toe, then duplicated the mesh and moved the copies to overlap the other fingers/toes. This worked because in Z-brush I had duplicated the fingers/toes and moved them around (and scaled them a little differently to match proportions).

Here is the final result of the re-topology, with the low-poly mesh over the top of the high-poly mesh. The re-topology covers the original mesh pretty accurately, only missing in a couple of areas. When enabling the smooth shader in Maya (which makes the mesh look high poly), the low-poly model looked almost identical to the high-poly model, which meant the process had gone well. I had also brought the poly count down significantly, to 8776.

UV Mapping/Texturing:

To UV map the skeleton, I first used the Planar tool, which projects each part of the skeleton along a specified axis. I most commonly used the Z axis, as this is the direction the character was facing. After doing this, I made seams in less noticeable areas of the mesh, such as the back of the skull (although after doing this, I realised this was not the best idea, as the character will be facing away from the user and therefore the back will almost always be seen). Despite this, my character wouldn't require much texture work, so the seams should not be too troublesome even if they are in less than optimal places.
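
To illustrate what a planar projection does conceptually, here is a minimal Python sketch. It is not Maya's implementation: `planar_uv` is a hypothetical helper that simply drops the coordinate along the projection axis and rescales the remaining two into the 0-1 UV range.

```python
def planar_uv(vertices, axis="z"):
    """Project 3D vertices along one axis and normalise the result to 0-1 UVs."""
    drop = {"x": 0, "y": 1, "z": 2}[axis]
    keep = [i for i in range(3) if i != drop]  # the two axes that become U and V
    flat = [(v[keep[0]], v[keep[1]]) for v in vertices]

    min_u = min(p[0] for p in flat)
    min_v = min(p[1] for p in flat)
    # Guard against a zero-size extent to avoid dividing by zero.
    span_u = (max(p[0] for p in flat) - min_u) or 1.0
    span_v = (max(p[1] for p in flat) - min_v) or 1.0

    return [((u - min_u) / span_u, (v - min_v) / span_v) for u, v in flat]
```

Note that two vertices which differ only along the projection axis (for example one on the front of a bone and one directly behind it) end up at the same UV position, which is why seams and unfolding are needed after the initial projection.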

After the UV mapping was done, I started to add colour to the character, which I did initially with a simple Lambert shader applied to the skeleton mesh. I also created two separate shaders for the eyes and teeth, for which I used Blinn shaders instead. I did this because Blinn shaders produce specular highlights, whereas Lambert shaders are purely diffuse, and I felt the highlights would work better for these parts of the model. It should be noted that the character looks high poly here, but this is simply Maya's smooth shading making the mesh look higher poly in the viewport only.

I was not personally keen on the colour of the skeleton, as I felt it was too plain and generic. I decided to use one of the noise textures Maya provides, which is pretty customisable. I added a darker tint to the colour and also added some noise, to give some variety. I definitely liked the result, as it gave the model a dirtier, grittier feel, which would also make more sense from a narrative point of view, as the character has been buried deep in a crypt for a long time.

Here is a close up of the noise texture applied to the model.

At this point I decided to work on baking a normal map for the character. A normal map is a texture which stores, for each pixel, the direction the surface should appear to face, so that lighting makes a flat low-poly surface look as though it has raised and recessed detail. This is a widely used technique not just in games but in other forms of 3D media as well, as it adds detail without adding geometry or altering the mesh, and this is especially useful in a game engine where performance is key to a good playing experience.
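
As a small aside on how a normal map stores its information: each pixel's colour is a direction vector squeezed into the red, green and blue channels. The Python sketch below shows the common 8-bit encoding, where each component is remapped from the range -1 to 1 into 0 to 255. The function names are hypothetical, the input is assumed to be a non-zero vector, and individual tools can differ in details such as whether the green channel is flipped.

```python
import math


def encode_normal(normal):
    """Map a surface normal (x, y, z) to an 8-bit RGB colour."""
    length = math.sqrt(sum(c * c for c in normal))
    unit = [c / length for c in normal]  # normalise to length 1
    # Remap each component from the range [-1, 1] to [0, 255].
    return tuple(round((c * 0.5 + 0.5) * 255) for c in unit)


def decode_normal(rgb):
    """Map an 8-bit RGB colour back to an approximate normal vector."""
    return tuple(c / 255 * 2 - 1 for c in rgb)
```

A normal pointing straight out of the surface, (0, 0, 1), encodes to (128, 128, 255), which is why tangent-space normal maps appear mostly light blue.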

I started in Maya by placing the high poly character over the top of the low poly mesh. I then went to the rendering shelf, and under the lighting/shading menu I used the Transfer Maps option. This brings up a menu which can be used to create a Normal map from a high poly mesh which can be baked on to a low poly mesh. The idea being, once completed, all of the detail from the high poly mesh will be applied to the low poly mesh but in the form of a texture rather than more quads.

Unfortunately even though I had used all the settings correctly, the baking process did not work. In the image above you can see the result of the low poly mesh with this texture applied which was generated by Maya. You can see that the geometry does look considerably smoothed, however there are these blocky outlines across the mesh. Unfortunately I was not able to find a solution to this problem.

I decided to try a third-party program called xNormal to do the same process, taking the exported high poly mesh and low poly mesh into the program in order to bake the detail into the low poly mesh as before. My hope was that this program would give the result I was looking for. However, the problem persisted. This tells me that perhaps the problem lay elsewhere, and could be due to my mesh. My theory is that the UV map was so densely packed into one UV tile that the baking process did not work correctly, because the normal map relies on the UV map. I have never used UDIMs, but perhaps this would have fixed the problem. However, I decided that the low poly mesh in its current form would be suitable for the purposes of this game prototype.

I have decided to not complete the rigging stage until later in the project. I am not very familiar with Unreal Engine, as this will be my first project in any sort of game design. I am aware of a method in which you can retarget animations from the default character in Unreal onto my own, but as I am not absolutely sure, I am going to work on the environment and props for the time being, and then come back when I have had time to explore this method properly.

Game Map/Prop design:

Game Map:

To begin the game map, I created a basic outline of the world layout using my concept art as a base for this. I created this in Maya only this time, as opposed to creating it in Z-brush as I felt that Maya would be suitable for creating a game map to the level of detail needed. I also did not feel like it would be worth retopologising the map either. I started by creating a box and simply extruding the side of the shape to create a basic outline of the map, and then filled in the floor using the Quad Draw tool. I coloured the floor black with a simple material just so I could easily differentiate the floor from the walls, as they were part of the same mesh. I also created some very basic outlines of the doors in the map so I knew where they would go. I had also created a simple coffin model in the level as seen on the bottom-left of the image, which is a very simple low-poly model.

I then removed all of the excess quads which would not be visible from the inside of the game map. These would otherwise be rendered unnecessarily despite never being seen, so removing them is a performance consideration. I then started to add a roof to the game map, again opting to use the Quad Draw tool. This was especially useful as you can draw a line of quads by holding the TAB key and dragging with the middle mouse button, which sped the process up significantly.

I continued the process in the central-most room. I decided to make this room larger and more open so I could allow more enemies to be placed here, so the player has time to see them coming.

I finally finished the process of making the game map 'watertight', filling in all the gaps so it was a completely filled in mesh. I have hidden the doors here for the screenshot.

On the topic of the doors, I moved on to creating the doors in my map. I had to keep the door and door arch as a separate mesh, so that in the game engine I would be able to create a sequence for the door lifting upwards. I added some subtle details such as the keyhole to the door, but the majority of it remained very low poly.

As the door was already very low poly, I decided that it would be worth adding some slight extra topology detail to the mesh, which can be seen in the above image. This only added 24 quads to the mesh, which I would argue is an insignificant number.

I proceeded to work on UV mapping the game world. I did this by first using the Planar function from the Y axis. I then created a seam around the floor of the map. The floor would have a separate texture, so this would be necessary, and conveniently offers a very efficient UV map as well. For the top half of the game map, I had to use the Unfold function, which helped to expand out the areas around the sides of the map which would otherwise be unseen from the Y axis planar.

I was able to find a suitable free texture online to serve as a dirt texture, which I adjusted in Photoshop before importing it into Maya. I deliberately sourced a seamless texture because I anticipated a problem: stretched once across the whole map, the texture would look very low in detail and pixelated. By using a seamless texture, I was able to adjust the UV tiling options in Maya so that the texture repeats multiple times across the surface, which solved this problem.
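
The reason tiling helps can be shown with some quick arithmetic. The Python sketch below uses made-up numbers (a 1024-pixel texture on a surface 100 units wide), and both function names are hypothetical.

```python
def texels_per_unit(texture_px, surface_units, repeats=1):
    """Texture pixels available per scene unit along one axis."""
    return texture_px * repeats / surface_units


def tiled_uv(u, v, repeats):
    """Wrap a 0-1 UV coordinate so the texture repeats `repeats` times."""
    return ((u * repeats) % 1.0, (v * repeats) % 1.0)
```

With these example numbers, a single copy of the texture gives roughly 10 pixels per unit, while repeating it 8 times gives roughly 82 pixels per unit. The trade-off is that the repetition can become visible, which is why the texture needs to be seamless.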

I repeated the process for the floor texture as well.

In Photoshop I was able to create a custom golden texture for the keyhole slot. I did this with a simple gradient and overlay. I also applied a wooden texture to the door, which I sourced for free and modified extensively before using it in my project. I did the same for the door on the other side of the map, but applied a silver colour to the keyhole instead.

My level would require two keys, one to enter the boss room, and another to proceed to the next level. I started by creating a very basic shape of a key (right-most shape), and then duplicated the key twice. I then added some extra details to the keys to make them look more interesting and appealing to the viewer.

I then easily UV mapped them by taking a Planar from the front of the keys (X axis) and then created a texture for each key in Photoshop. I specifically coloured the base of the key gold/silver to match the keyholes of the doors respectively. I designed them specifically to communicate what they could lead to, where the boss key (right key) looks very intimidating and aggressive, whereas the next level key (left key) looks like it is old, tarnished and has come from the boss of the level.

Finally, I needed a light source for my game world, so I decided to create a simple oil lantern, as I felt this would be the most fitting prop to populate the environment with. I was sure to keep the oil lantern low poly, but I needed to make the lantern hollow on the inside so I could place a light source inside it in the game engine. I then proceeded to model and texture it like I had done prior.

Character Rigging:

After taking another look at how I could animate my model, I discovered that a good way for a prototype could be to retarget the default animations on to my character. However, doing this requires a rig very similar to the default rigged character. A quick rig does this quite well, so I opted to use this. However, the default quick rig in Maya did not work initially, as you can see in the image above, where all of the joints have not correctly attached to the character.

I therefore opted to use the Step-by-Step method of quick rigging, where Maya still creates a quick and simple rig for you to use, but you can adjust where the joints are placed, as well as how many and some other options. Upon starting the quick rig, you can see where the automatic process went wrong, as the green dots represent where the program expects the joints to go. I manually adjusted the positions of all of these joint locations.

I was then able to use these joint locations to create a quick rig, and then skin the mesh. However, the skinning was not ideal: the default weights make the mesh bend smoothly across each joint, but this does not work for my skeleton model, as bones don't stretch like skin or muscles, which is the assumption Maya has made. I used the Paint Skin Weights tool to manually adjust the skin weights of all of the joints.

Once this was done, I needed to export the model into Unreal Engine. To do this, I used the Game Exporter option built into Maya to make sure I exported the character correctly. For example, Unreal Engine is primarily designed to handle the FBX format, which is something this exporter takes into account.
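
To illustrate the difference between smooth and rigid skinning, the sketch below assigns each vertex entirely to its nearest joint, so that every bone moves as a solid piece. This is only a conceptual Python example and not how the weights were actually painted in Maya. The function name and the joint names used with it are hypothetical.

```python
import math


def rigid_weights(vertex, joints):
    """Give the nearest joint a weight of 1.0 and every other joint 0.0."""
    nearest = min(joints, key=lambda name: math.dist(vertex, joints[name]))
    return {name: (1.0 if name == nearest else 0.0) for name in joints}
```

For example, with joints `{"upper_arm": (0, 0, 0), "forearm": (10, 0, 0)}`, a vertex at `(2, 0, 0)` is weighted fully to `upper_arm`. A smooth bind would instead split the weight between the two joints, which is what causes a rigid bone to appear to bend.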

Importing/setting up 3D assets into Unreal Engine:

Game world:

To begin, I started by importing the assets for the game world of my prototype level. Upon importing and placing the map, I got an error regarding the coffin lid that I had created. For some reason the coffin lid had been corrupted in Maya, so Unreal would not accept it. To fix this, I simply re-created the asset in Maya and re-imported it. This did not take long as the asset was very basic. I also got the errors of unsupported maps, which was due to overlapping UV maps. For my keys, I had not copied the back half of the key, and simply left both the front and back halves as one UV shell (overlapping). I thought this would be more efficient, but this clearly caused issues. Fortunately the issues were very minor, so this was something I did not feel I would need to rectify.

Also when importing my files, I created a Custom folder so I could easily manage my custom files that I had imported separate from the default assets available. I then created an Environment folder, and placed all these assets in there.

With the lantern models, I had created a transparent material in Maya. Unfortunately this did not carry over in Unreal, so I had to edit the texture to have an opacity value of 0.1. I did this by adding a constant to the blueprint, and editing the value, which I then connected to the opacity slot in the material node.
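
For context on what an opacity of 0.1 means visually, a translucent surface is typically combined with whatever is behind it using a standard alpha blend. The Python sketch below shows that formula. It is a simplified illustration that assumes colours with components between 0 and 1, and `blend` is a hypothetical name rather than an engine function.

```python
def blend(surface, background, opacity):
    """Standard alpha blend of two RGB colours with components in 0-1."""
    return tuple(s * opacity + b * (1.0 - opacity) for s, b in zip(surface, background))
```

At an opacity of 0.1, only 10% of the lantern glass colour contributes to the final pixel and 90% comes from what is behind it, so the glass reads as almost fully see-through.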

This meant that I could now place a point light inside of the lantern to light up the environment, replacing the directional light that is placed in the level by default. I parented the point light to the lantern, and proceeded to populate the game world with these lanterns.

One detail I had forgotten to address was the roughness values of my materials. This adjusts how much light is absorbed versus how much is reflected. Looking in the image above, you can see that the ground material absorbs the light quite realistically. However by comparison, the walls are reflecting it, and this trait does not properly represent the material. Therefore, I went into the material editor and adjusted their roughness values until I got this to where I was happy.

After all of my objects had been placed in the game world, I needed to work on collisions of all of my models. This determines where the player will collide with the model, as without these the character would just walk through it. Some of these automatically generated collision boxes were fine, but some such as the one for the coffin were not ideal, as they did not cover the model very well.

I was able to rectify this by removing the automatically generated collision and adding a few custom ones, which I did by adding a box collision and rotating it to fit the model better. I decided to allow the player to jump inside the coffin to add some character to the game world.

The most complicated collision to create was for the game world, which was significantly bigger and required many smaller rectangular collisions. The floor was simple as it is flat, so it only needed one plane, but the walls required many as they are very uneven. It can be frustrating to be blocked off by an 'invisible wall', so I wanted to avoid this complaint with a more thorough collision in my map. However, I feel this was done very inefficiently, and I would hope to use a more efficient method in future, as I would be surprised if this was the correct approach.

Character imported:

I proceeded to then import my character into Unreal, which I placed into its own custom folder. Arguably this would be put into a folder of its own for the specific character, but I deemed this unnecessary because my prototype would only feature one character, albeit with an enemy character with an adjusted material. Because I imported the character as a skeletal mesh (automatically selected because the character FBX file had a rig attached), Unreal Engine had translated that extra information into 3 files: Skeleton, Skeletal mesh and a Physics Asset, which will be useful in the animation stage.

My plan was to retarget the animations from the default character Unreal provides and apply them to my character. This would save me the time of creating my own animations, which was something I had not done before, so retargeting was the method I was more comfortable with. To start, you need to open the ThirdPersonCharacter skeletal mesh, then head to the 'Retarget Manager' at the top of the screen.

To do this, you need to have the characters targeted to the 'humanoid rig' in Unreal, so the engine can place the joints of the target onto the generic rig it has. For the 'ThirdPersonCharacter', this was very easy as the character is made for the engine, so all of the joints are named exactly the same as the joints of the 'humanoid rig'.

I repeated the process for my custom skeleton character. The process was the same, but the joint names of my rig did not exactly match those of the humanoid rig. For example, the lower leg joint in the humanoid rig is called the Calf, but the quick rig from Maya names this the lower leg. So unlike with the ThirdPersonCharacter, you need to manually go through all of the joints and assign each one to its equivalent in the humanoid rig, which has the same joints under different names.

Once this is done for both characters, you can then retarget the animations from the source character (ThirdPersonCharacter) to my custom character. To do this, I right clicked on the idle animation to test how this looked.

This brings up the retarget menu, where the source character is already selected. I then selected my character from the left hand side, and retargeted the idle, walk and run animations.

Unfortunately, you can see the process does not work if your character's rig does not match the source character of the animations, as the character's shoulders have clearly not mapped correctly, despite the rest of the character working correctly. From this editor I also realised the eyes had for some reason not loaded or exported correctly, as they were not there. I decided to address this issue after the faulty animations.

I decided to seek help from my lecturer about the issue, to see if there were any solutions to the problem. Whilst I did this, I decided to complete my setup for the animations for the character. When the character transitions from the idle to walk to run cycle, it uses a Blend Space. I created a custom one for my character.

This is the blend space timeline, with the green dot representing the current previewed speed of the character. The left side of the timeline is 0 speed (stationary), and the right is 100 (max speed, running). What the blend space does is determine the animation the character should be using depending on the speed of the character, and blends between the animations.
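The blending idea can be sketched outside the engine in a few lines of Python. This is only an illustration of a one-dimensional blend between neighbouring animations, not how Unreal implements it internally. The idle position of 0 and the run position of 100 come from the timeline described above; placing the walk animation at 50 and the name `blend_weights` are assumptions made for the example.

```python
def blend_weights(speed, samples):
    """Return {animation_name: weight} for a 1D blend between samples.

    samples is a list of (speed, animation_name) pairs sorted by speed.
    """
    lo_speed, lo_name = samples[0]
    if speed <= lo_speed:
        return {lo_name: 1.0}
    for hi_speed, hi_name in samples[1:]:
        if speed <= hi_speed:
            # Linear blend between the two neighbouring animations.
            t = (speed - lo_speed) / (hi_speed - lo_speed)
            return {lo_name: 1.0 - t, hi_name: t}
        lo_speed, lo_name = hi_speed, hi_name
    # Faster than the last sample: fully the fastest animation.
    return {lo_name: 1.0}


# Assumed sample positions: only 0 (idle) and 100 (run) are from the text.
SAMPLES = [(0.0, "Idle"), (50.0, "Walk"), (100.0, "Run")]

print(blend_weights(75.0, SAMPLES))
```

At a speed of 75 the character would be shown as an even mix of the walk and run animations, which is the kind of in-between pose the green dot previews.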

To use the blend space, you need a state machine to utilise it, and as I was not familiar with state machines or how to create my own, I opted to use the ThirdPersonCharacter state machine by using the same retarget option as before.

Now that all of this had been set up, I was able to replace the default character with my own custom one. I did this by first selecting the ThirdPersonCharacter in the game world, and then replacing the mesh on the right side of the screen. You will notice the mesh is in A-pose, because no animations are selected for this skeletal mesh yet.

I was then able to select the retargeted state machine (also known as the AnimBP in the workspace) in the player controller mesh once I had replaced the mesh with my own. This had effectively replaced the ThirdPersonCharacter with my own custom character, with the replaced animations.

Here is a screenshot of the character being moved in the game world, correctly blending to the run cycle when moving (using a keyboard).

I tried to work out the reason the character was not working with the retargeted animations. I placed both characters side-by-side in their windows to try to diagnose the problem, as the pose was very similar. I narrowed the cause down to the rig of my character not being close enough to the humanoid rig.

I tried to adjust the joints of my character in Unreal to try and resolve the retargeted animations by editing the default pose. I tried to match the joint as closely to the ThirdPersonCharacter (which basically has the same rig as the humanoid rig) with both characters side-by-side, to see if this would fix this problem.

To update the change, I had to modify the pose to use the current pose, the one I had just edited.

Unfortunately this did not resolve my issue, so I decided to reach out to my lecturer for help with this issue, due to my inexperience with the engine. I decided to move on for the time being to make good use of time whilst waiting.

Level Progression & Sequences:

For my game, I wanted a system in place that allowed the collection of keys in the level. This would allow the player to progress through the level, to reach the checkpoint that would begin the next level of the game. To start, I needed a system where a player would 'collect' a key. I began by placing a TriggerVolume, an invisible shape which can be used to recognise if a player has overlapped it or not. I gave it a volume which overlapped the key, with a little bit of extra room around it.

From here, I added a new event to the TriggerVolume 'OnActorBeginOverlap', which would send a signal when the actor had made contact with the TriggerVolume. I also named it appropriately so I could easily recognise this was going to be the TriggerVolume for this specific key.

This takes me to the level blueprint, which only operates for this level of the game. I started by creating a boolean, a variable which has the values of true or false. I set the default value of the boolean to false.

I added the variable into the blueprint so that when the character overlaps the TriggerVolume, the variable would be set to true. This would be useful in the next section of the blueprint. Once the variable had been updated, the key would be collected, but this would not be recognised to the player, because the player would just walk up to the key and have no indication the key had reacted. Therefore, I added the node 'DestroyActor' which removes the targeted object from the level when signaled, which would only be signaled when the TriggerVolume is triggered. I then got a reference to the boss key in the level, and set this as the target object. Finally, I added a print string for a message to the user. Typically this would be included in the user interface, but for the purposes of the prototype, I felt this was fine.

The next step was to allow the collected key to open the door in the level. I again opted to use a TriggerVolume here, so the door would open when the player overlapped it. I gave the door a larger TriggerVolume so it would open from a bit of a distance. This way the player would not have to walk right up to the door and wait for it to slowly open, which makes the game a bit more convenient for the player.

I then went back into the level blueprint, creating an event for when the trigger volume is overlapped by the player. I added a branch node after this, which is an If condition that determines an outcome whether the condition is true or false. This is where I use the boolean from earlier, which will tell this section of the code if the player has collected the key or not. If this boolean is true, the true part of this node will fire, which will lead to the door opening.

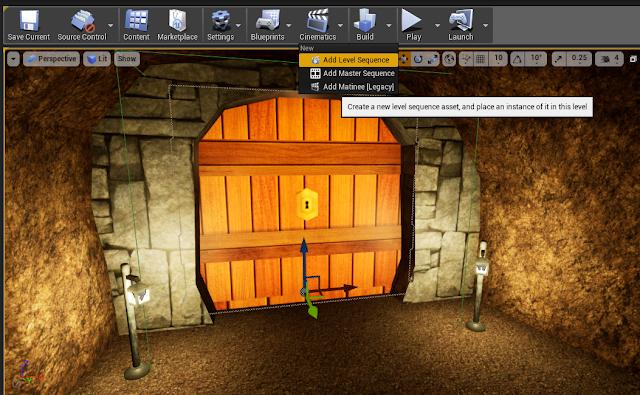

To open the door, I needed to create a level sequence, as I wanted the door to slide upwards and actually open, rather than just disappearing like the key does. To do this, I went to Cinematics and added a level sequence.

From here, I can use the sequence timeline. To create one for the door, I needed to click on the door first, and then create a new track and select it in the top of the menu, which adds it to the timeline.

I then made keyframes for the position of the door, so after 4 seconds in the timeline passes, the door will slide up and out of view.

Now that I had a sequence, I could then play this from my level blueprint. Using the part of code created earlier, I used the true branch to cause the sequence to play, which I created by first creating a reference to the sequence titled 'BossDoor2' and then creating a Movie Sequence Player off of that. I also created a simple print string to state to the player that they don't have the key to the door if they overlap the TriggerVolume when they don't have the key, or rather, when the boolean is false. This is also useful simply to test to see that this part of the code is working correctly.
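The key and door logic described in this section can be summarised as a plain Python sketch. It is not the Blueprint itself, only the same flow written out: a boolean that starts as false, a key overlap that sets it and removes the key, and a door overlap that branches on it. The class name, attribute names and message strings are all made up for the illustration.

```python
class LevelState:
    """Hypothetical stand-in for the level blueprint's key and door logic."""

    def __init__(self):
        self.has_boss_key = False   # the boolean, default value false
        self.key_in_level = True    # becomes False once the key is destroyed
        self.door_open = False      # True once the door sequence has played
        self.messages = []          # stands in for the print strings

    def on_key_trigger_overlap(self):
        # Set the boolean, remove the key, and tell the player.
        self.has_boss_key = True
        self.key_in_level = False
        self.messages.append("Boss key collected")

    def on_door_trigger_overlap(self):
        # Branch node: only play the door sequence if the key is held.
        if self.has_boss_key:
            self.door_open = True
        else:
            self.messages.append("You do not have the key")


level = LevelState()
level.on_door_trigger_overlap()   # no key yet, so the door stays shut
level.on_key_trigger_overlap()
level.on_door_trigger_overlap()   # now the door opens
print(level.door_open, level.messages)
```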

Here is a video of me testing this part of the code. The character is still not fixed at this point, but this was to test out the sequences. You can see that the key is collected and functions as intended. However, when the door opens, there are two problems. The first is that the door opens a little late, so you end up bumping into the door, or having to wait for it to open. The second is the more pressing issue, where the door will open and the sequence will play, but after the sequence has finished, the door will revert to its original state. These are two problems I intended to fix.

I began by addressing the second issue mentioned earlier, where the sequence would reset after playing, which is not what I wanted. I wanted the door to remain open after being played. This is a relatively simple fix, where you simply need to adjust the properties of the sequence so that the sequence will be set to 'Keep State' where the state will remain at the end of the sequence. The project default will restore the state of the sequence, which is why this problem was occurring.

I was also able to easily re-adjust the size of the TriggerVolume so that the door would open from further away, adding convenience to the player.

I repeated the steps for the other door in the level, which leads to the next level of the game.

Upon testing this amended code, I noticed another problem, where you could re-activate the sequence even if the state was set to Keep State. This is because the character still hits the TriggerVolume on the way out of the boss room, thus triggering it a second time. To fix this, I used the DestroyActor node again, this time with the TriggerVolume as the target, so it would no longer be present in the game after the door had been opened.
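The effect of destroying the TriggerVolume can be shown with a small one-shot trigger in Python. This is a sketch of the idea only; the `DoorTrigger` class and its `active` flag are hypothetical, with the flag standing in for whether the actor still exists in the level.

```python
class DoorTrigger:
    """Hypothetical one-shot trigger: it stops responding after its first use."""

    def __init__(self):
        self.active = True      # False plays the role of the destroyed actor
        self.times_played = 0   # how often the door sequence has been played

    def on_overlap(self, has_key):
        if not self.active or not has_key:
            return
        self.times_played += 1
        self.active = False     # equivalent in spirit to DestroyActor


trigger = DoorTrigger()
trigger.on_overlap(has_key=True)   # entering the boss room
trigger.on_overlap(has_key=True)   # leaving again: nothing happens
print(trigger.times_played)
```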

To demonstrate the progression, I wanted to implement a mechanic for the player to progress to the next level. For this, I created a new level from one of the templates. The level needs nothing in it, it just needs to be a level to demonstrate that the player can progress from one to another.

To progress to this level, the player will need to hit this new TriggerVolume, placed directly behind the opened door to the next level.

I then used a simple bit of code where the TriggerVolume actor overlap caused the next level to be opened. In hindsight, what I could have done to prevent possible abuse would be to only allow the TriggerVolume to be activated if the key had been collected. So for instance, if the key to the next level had been collected, the same boolean that let you open the door would let you enter the next level. However, this should not be abusable, since the collision of the map completely stops you from getting to this TriggerVolume, so for the purposes of the prototype this is fine. It is just something to keep in mind for the making of a fully developed game, where you have to be careful of abuse or glitches that could cause abuse or unintended methods of progression for example.
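The safeguard mentioned in hindsight could look something like the following Python sketch, where the level only changes if the key boolean is true. The function name and the level names are invented for the example.

```python
def level_to_load(current_level, has_exit_key, next_level="NextLevel"):
    """Return the level to load when the exit trigger is overlapped.

    Without the key the player stays in the current level, even if they
    somehow reach the trigger.
    """
    if has_exit_key:
        return next_level
    return current_level


print(level_to_load("PrototypeLevel", has_exit_key=False))
print(level_to_load("PrototypeLevel", has_exit_key=True))
```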

Enemy AI Character:

For my game, I wanted to have some form of attackable enemy to demonstrate the combat mechanic I wanted to implement. To do this, I needed to first implement a form of AI which would seek the player. This can be done in Unreal Engine quite easily, but for the AI to find a path to the player, you need to add a Nav Mesh into the map. The area must cover the entire map (or wherever the AI character is expected to be able to navigate to), which can be seen in the image above.

To create an enemy character, I simply duplicated the blueprint class of the ThirdPersonCharacter so I could edit the AI_Enemy as a separate entity.

Editing the blueprint, I went into the viewport section, and deleted the unnecessary components such as the camera, which would not be needed. I then added a Pawn Sensing component, which would be used to allow the AI enemy to track the playable character.

Here is an example of the sight range of the Pawn Sensing component. The green circle is the most important, as it dictates what the AI character can see. Typically games will set the vision angle to around 70 degrees, which is roughly the same field of view as a normal person has. For the purposes of editing, I used 70 degrees so I could test mechanics, but for my game I wanted a full 360 degrees of vision, as I wanted the enemy AI character to detect the player from any angle. This is because I was not looking for realism or stealth in my game, at least not for this particular enemy. A narrow field of view could also be heavily abused: since I was not planning on implementing any sound mechanic to let the enemy AI know the playable character was close, the player could easily sneak around it. The enemy would also not be difficult, this being the starter level. So I went ahead with this decision.
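To make the difference between a narrow and an all-round field of view concrete, here is a small 2D sight check in Python. It is a generic sketch and not the Pawn Sensing component's own code. It measures the angle from the enemy's forward direction, so a 70-degree field of view is written as a half-angle of 35 and all-round vision as a half-angle of 180; how the component itself interprets its angle setting should be checked in the engine documentation. The function name and the example positions are assumptions.

```python
import math


def can_see(enemy_pos, enemy_forward, player_pos, half_angle_deg, sight_radius):
    """Return True if the player is within range and inside the vision cone."""
    dx = player_pos[0] - enemy_pos[0]
    dy = player_pos[1] - enemy_pos[1]
    distance = math.hypot(dx, dy)
    if distance > sight_radius:
        return False
    if distance == 0:
        return True
    fx, fy = enemy_forward
    forward_len = math.hypot(fx, fy)
    # Cosine of the angle between the forward direction and the player.
    cos_to_player = (dx * fx + dy * fy) / (distance * forward_len)
    return cos_to_player >= math.cos(math.radians(half_angle_deg))


# Enemy at the origin facing +X, player standing behind it.
print(can_see((0, 0), (1, 0), (-5, 1), half_angle_deg=35, sight_radius=10))
print(can_see((0, 0), (1, 0), (-5, 1), half_angle_deg=180, sight_radius=10))
```

With the narrow cone the player behind the enemy goes unnoticed, whereas with all-round vision the same player is detected.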

To begin, I went into the event graph of the enemy character and added an event for the Pawn Sensing component created earlier, for when the character could see the player (when the player was inside the sight radius).

I then added a cast to ThirdPersonCharacter (my playable character), and then an AI MoveTo node. Essentially this causes the enemy character to chase after the playable character, but only if the AI character can detect the player within its sight radius.

To make this AI MoveTo node work, I needed to get a reference to the playable character and the enemy character, so the node knew what to chase and what was chasing it, as the previous image just causes the node to fire, but not what to target. I got a reference to self for the Enemy AI and used the Cast To node to reference the playable character. This caused the Enemy AI character to chase after my character when testing this in the viewport.

After testing the code, I realised that the speed of the enemy character matched that of the playable character, which made it very difficult as you could not outrun the character. I therefore changed the speed to something more suitable which would give the player a chance to outrun the enemy character, whilst not being too easy to beat.

I also changed the enemy AI character's mesh to my custom skeleton character (left character in image) and added the animation blueprint, so the character would be animated. I also changed the enemy's material to a different colour from my playable character's to make the two distinguishable.

User Interface: Health of playable character:

I now wanted to implement a health mechanic, starting with the playable character. To test this was working, I decided to make a start on the user interface which would display the character's current health on screen. To do this, I created a new Widget Blueprint.

This is the screen when editing the blueprint. The flower in the top left represents the anchor point of the objects placed, which can be changed. This is useful when changing to different resolutions of window sizes and screen sizes, as it means objects will generally stay in the same position if anchored correctly. I wanted the health bar to appear in the top left of the screen, so I kept the anchor point in the top left. I also created a progress bar which would be used to display the health. The design is temporary to test the health mechanic for now.

To actually create the widget on screen, I needed to draw it using a blueprint. To do this, I used the Create Widget node and selected my created widget in the class drop down box. I then promoted it to a variable for reference, and then added it to the screen with the Add to Viewport node. I had used the ThirdPersonCharacter to do this, although in hindsight I probably should have placed this under the level blueprint instead as better practice, but this would not have a largely negative impact on my project.

Upon compiling and testing the code, you can see the bar has been drawn on screen. However there is no associated information with it, it merely just shows a blank progress bar.

To implement health into the game, I needed two variables, the current health of the character and the character's maximum health. These were both float variables, with the variables having a default value of 100. These were set in the ThirdPersonCharacter Blueprint.

Under the HealthBar widget, I went back to the progress bar and found the percent option in the settings. To show this, I added a colour for the progress bar. To have this value change depending on the health of the character, I created a Binding on this percent option.

To reference the variables, I started with a Cast To ThirdPersonCharacter, which essentially says 'perform this action as this object'. To reference the object, I used a Get Player Character node.

I then was able to get the values of the variables from the ThirdPersonCharacter blueprint from within this binding graph.

To correctly get the value of the current health to display in the progress bar, I needed it to be in the form of a percentage, so I used a simple Float / Float node to divide the Current health by the Max health.

Here is the result. As you can see, the progress bar is now 100% red, because the default value for Max health is 100, and the Current health is also 100 health since there has been no change to the variable, causing the value to be 1.0 in the percentage bar equaling 100%, causing it to be fully red.
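The calculation behind the binding is just a division, which can be written out in Python as below. The clamping to the 0 to 1 range and the guard against a zero maximum are additions for safety in this sketch and are not described in the Blueprint above; the function name is also made up.

```python
def health_percent(current_health, max_health):
    """Return the fill fraction for the progress bar, between 0.0 and 1.0."""
    if max_health <= 0:
        return 0.0
    fraction = current_health / max_health
    # Clamp so overhealing or negative health cannot break the bar.
    return max(0.0, min(1.0, fraction))


print(health_percent(100.0, 100.0))
print(health_percent(25.0, 100.0))
```

With both values at their default of 100 the result is 1.0, which the progress bar draws as completely full.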

Implementing a damage system for the health required an Apply Damage node, hooked up to the AI MoveTo node created earlier in the enemy blueprint. This would only apply damage if the enemy character successfully reached its target, the playable character. This also needed a variable to determine the damage dealt, for which I created a simple damage variable with a float value of 10.0. Calling it just 'damage' is a poor naming convention, but as this prototype would feature only one enemy, it was fine for these purposes.

Back in the ThirdPersonCharacter blueprint, I used an Event AnyDamage node to create the trigger for my damage being dealt. This also stores the damage variable from earlier, which I can use to calculate the damage dealt. To calculate this, I used a simple Float - Float, subtracting the damage from the current health, setting the new current health of the character after this calculation.

With a damage system, you ideally need a death mechanic, which is something you can handle with an Event Dispatcher, which is a handy way of notifying other parts of code when an event has occurred.

From the end of the Set Current Health node, I attached a branch, to determine whether the health has reached less than or equal to 0 health (0 health being when the character has died). This would trigger the event Player Died.

I wanted the enemy AI characters to stop when the player had died, so to do this, I used this code to set this up. This is done in the AI_Enemy blueprint, which needed another Cast to ThirdPersonCharacter node as this was where the event had been created. This event being triggered in the ThirdPersonCharacter blueprint would cause a new event named 'Reset_AI' to fire.

This Reset_AI event would cause the AI character to stop sensing the player, and therefore stop all movement and attacking. I got a reference to the Pawn Sensing component from earlier, and dragged this into a Set Sensing Updates Enabled node. This node will determine if the Pawn Sensing component attached will continue to update, and because the boolean at the bottom of the node is unchecked, if this event fires, the AI will no longer update, and will stay stationary. My plan is to later create a death screen for when the player has died, and having the characters continue to move around and attack would not be ideal.
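The damage, death and Reset_AI flow can be sketched in Python using a simple list of callbacks in place of the Event Dispatcher. This is an analogy to show the notification pattern, not a translation of the Blueprint nodes. The class and method names are illustrative; the 100 health and 10 damage values are the ones used above.

```python
class Player:
    """Hypothetical player with health and a 'player died' dispatcher."""

    def __init__(self, max_health=100.0):
        self.max_health = max_health
        self.current_health = max_health
        self.on_player_died = []   # callbacks bound to the death event

    def any_damage(self, damage):
        # Subtract the damage, then check whether the player has died.
        self.current_health -= damage
        if self.current_health <= 0:
            for listener in list(self.on_player_died):
                listener()


class Enemy:
    """Hypothetical enemy that stops sensing once the player has died."""

    def __init__(self, player):
        self.sensing_enabled = True
        player.on_player_died.append(self.reset_ai)

    def reset_ai(self):
        # Mirrors switching the sensing updates off.
        self.sensing_enabled = False


player = Player()
enemy = Enemy(player)
for _ in range(10):          # ten hits of 10 damage
    player.any_damage(10.0)
print(player.current_health, enemy.sensing_enabled)
```

The advantage of this pattern is that the player does not need to know which enemies exist; each enemy registers its own interest in the event.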

Custom character: Fixes and Animations:

I wanted to continue with the damage system for the player and AI character, but could not do so until the character had been fixed, as I needed to create custom attack animations. Unfortunately, at this point I had not received any reply on how to fix my character in the engine, so I decided to go back into Maya and fix the character there. I also needed to create the animations, which would be done in Maya as well.

One of the issues I had was that the eyes were disappearing when binding the skin to the mesh. In the demo video linked earlier in this blog, you can see the eyes float behind the running animation of the character. I had tried to import the character with eyes set as a parented constraint to the head controller of the Maya rig, but this did not work because Unreal does not use any controllers and simply uses the joint information. After some experimenting, I found out the problem seemed to be that the eyes I had were so small and far from the rest of the mesh, that perhaps Maya was not recognising it, so it was either being hidden or removed. I was able to solve this problem by increasing the length of the eyes, and placing them closer to the eye sockets in the skull. I also reduced the depth of the eye sockets too, and this did the trick.

Now the main problem was that the skeleton would distort when animated with the default Unreal Engine animations. I determined that my rig was not close enough to the humanoid rig in Unreal, and would always cause problems. I think this was because I had created my character from scratch without using any reference for human-like proportions, as I was creating a fantasy-style character rather than a realistic one. This was a detail that I had not thought of, nor realised would be an issue, until now. It meant that I would need to create my own custom animations for my game, as well as an attack animation. I therefore made the decision to create the following animations for my character:

- Idle cycle

- Walk cycle

- Run cycle

- Attack (slow for Enemy)

- Attack (fast for Player)

This would be a total of five animations required. I decided not to create my own custom jump animations because these would not be required for my game prototype, and the animation problems are less noticeable when jumping too.

I proceeded to create all these animations in Maya. As seen above, I was using reference for the walk and run cycles as these were arguably the hardest to produce, so having reference is always helpful. I didn't follow it exactly, as I was mainly using it for the poses in the animation. I also tried to keep the frame range of the animations similar to that of the default animations in Unreal so that the speed of the character would not cause the animations (walk/run) to look off. I also made the Punch animation twice as long as the Slash animation as I wanted the enemies to attack with a slower attack, which would therefore need a slower animation.

When exporting the animations, I again opted to use the Game Exporter from Maya. However this time I used the animation export tab instead, as it enables some important settings, such as the frame range. One incredibly important element that this provides is the 'Bake Animation' option. Unreal Engine will use animation that is on the joints, not the controllers, and this will effectively do this process for you when exporting, as otherwise the animation will NOT carry over into Unreal.

When importing these animations into Unreal, you are prompted on how you want to import them. I had deselected the Import Mesh option, keeping the Import Skeletal Mesh on, so that only the joints would import (with the animation baked), as the mesh was already in my Unreal Project. The engine then gave me the option to apply the animation to an existing skeletal mesh, which I did for my skeleton character. This applied the animation to my character immediately, and I proceeded to do the same process for all 5 of my animations.

Player attack animation:

To begin, I needed to create a mapping for the key I wanted to use, for which I decided to use the left mouse button, which is a very common video game trope across genres. I did this in the Project settings.

I next went to my character state machine, where the default state machine had been created already. I wanted to add a new state to my state machine to blend the character from an idle/running state to an attacking state (when the button had been pressed), so I started by creating a new state and adding it into the flow of states.

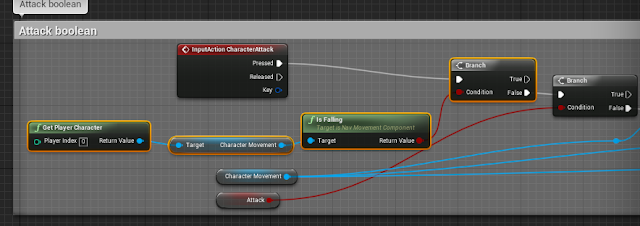

I then went into my ThirdPersonCharacter blueprint, and added my LMB as an event in my graph. I then used this to set a boolean to determine if the character was attacking. I then used a delay which would correspond to the length of the animation of attacking, and then used the same boolean but set it from true to false. This boolean was primarily created so that I could use it in my state machine to determine whether the transition from idle/run to attacking should take place/revert.

Back in my state machine, I added my attack animation into the graph, and dragged this into the output animation pose node. This would tell the state machine which animation to play when the transition condition is met. It should be noted that I used the animation SkeletonCharacterSlash for this state machine, but the screenshot features the incorrect animation.

Still inside the state machine, I switched to the event graph. Here is where I could set the variable the state machine transition would use to start the attacking animation. Because the variable Attack was inside my ThirdPersonCharacter blueprint and not the state machine blueprint, I needed to use a Cast To my character node, so that I could reference the boolean here, where I was able to set a new boolean to the same value. This is because I can only reference the character boolean here, and not in the transition, so this was the way in which I had to do this.

To finish, I went into the attack transition, took the new Attacking boolean I had just set, and dragged it into the Result node. This determines whether the transition should begin, and becomes true once the LMB has been pressed. For the transition back from attacking to idle/run, I used similar logic, but in between the Attacking boolean and the Result node I added an == Boolean node, which checks whether the value is false, and if so, allows the transition back. This completes the loop in the state machine, making it work as intended.

Now I wanted to add a constraint to my attacking animation, as I did not want the player to attack if the animation was already underway, as the player could abuse this and just constantly click the LMB. To prevent this, I added a Branch node which I fed the value of the Attack boolean variable. This would only fire if the Attack boolean was false, which meant that you could only attack if you were not already attacking, which solved this problem for me.
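The attack boolean, the delay and the Branch that blocks repeated clicks can be modelled with a small timer in Python. This is a sketch under the assumption that the delay equals the animation length; the `AttackState` class, the `tick` method and the one-second duration are invented for the example.

```python
class AttackState:
    """Hypothetical model of the Attack boolean and its delay."""

    def __init__(self, animation_length):
        self.animation_length = animation_length
        self.attacking = False
        self.time_left = 0.0

    def press_attack(self):
        # Branch: ignore the click if an attack is already underway.
        if self.attacking:
            return False
        self.attacking = True
        self.time_left = self.animation_length
        return True

    def tick(self, delta_seconds):
        # Plays the role of the Delay node: reset the boolean afterwards.
        if self.attacking:
            self.time_left -= delta_seconds
            if self.time_left <= 0:
                self.attacking = False


state = AttackState(animation_length=1.0)
print(state.press_attack())   # first click starts the attack
print(state.press_attack())   # second click is ignored
state.tick(1.0)
print(state.press_attack())   # allowed again once the animation is over
```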

To test this, I added my new state machine to my main character, and also added a duplicate for my enemy AI character just for testing purposes for now (this would be modified later). I did this in the main editor in Unreal, on the right hand side after selecting the character in the game world.

When testing the game, not only can you see the animations have successfully been applied, but the issues regarding the eyes and joints being deformed no longer persist! I had also updated the material texture on my character on the left, as before it had not been correctly applied. Finally you will notice my main character is wielding a weapon, which was easily done by first adding a socket to my skeleton hierarchy in the skeletal mesh, and adding a socket on the right hand. I then went into the ThirdPersonCharacter editor and attached this by first spawning the weapon on the Event BeginPlay, and then using the Attach To Socket node to attach it to the socket made earlier.

Player attack ability:

To allow my character to attack, I first needed to detect whether or not the character had successfully hit. I decided to do this using the weapon I had created, where I placed a volume around the mesh by creating a new Capsule Component from the menu in the top left. This capsule would be used as the 'hitbox' of the weapon, where if the hitbox overlapped the enemy, it would result in a successful attack.

I then went into my AI_Enemy, creating both an event and reference to the new Capsule Component I had created. This means that the following code would only fire if the capsule overlaps the enemy.

I first cast this node to the weapon I had created earlier, so that the capsule would follow. I then cast to the ThirdPersonCharacter so I could reference the Attack boolean. This finally leads into a branch, which takes the Attack variable and will only fire if it is true, so the player cannot simply walk up to the enemy and deal damage without pressing the LMB.

The next step was to apply the damage if this condition was met. I used a Sequence node to split the nodes just for easier use. I proceeded to take the variable of the current health of the enemy character, which I had set the default value of to 30. I then used a Float - Float node to remove 10 health if the condition had been met, 10 being the damage the character dealt. I then created a Float <= Float node to determine if the enemy character had been taken to 0 (or less) health, where it would be considered dead. This being true would cause the enemy character to be destroyed via the DestroyActor node, removing that instance of the enemy from the game.
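The project itself is built from Blueprint nodes, so the following is only a rough Python sketch of the same damage logic, not the actual graph. The class and function names are hypothetical, and the guard against hitting an already destroyed enemy is an added assumption; the health of 30 and damage of 10 come from the description above.

```python
from dataclasses import dataclass


@dataclass
class Enemy:
    health: float = 30.0     # default health stated in the text
    destroyed: bool = False  # stands in for the actor having been destroyed


def on_weapon_overlap(enemy: Enemy, player_is_attacking: bool,
                      damage: float = 10.0) -> None:
    """Mirror the overlap event: Branch on Attack, subtract, then check <= 0."""
    # Branch: walking into the enemy without attacking deals no damage.
    # The 'destroyed' check is an extra guard assumed for this sketch.
    if not player_is_attacking or enemy.destroyed:
        return
    enemy.health -= damage      # Float - Float
    if enemy.health <= 0:       # Float <= Float
        enemy.destroyed = True  # stands in for DestroyActor
```

With these values, an enemy survives two successful hits and is destroyed on the third.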

Back in my ThirdPersonCharacter blueprint, I wanted to prevent the character from moving while the attack animation was playing, as the constraints applied earlier stopped the character from 'attacking' the enemy repeatedly, but not from moving. Otherwise the player could just run around the map and attack over a much larger radius than when the character is stationary, which gives a huge advantage. Preventing movement also means the attack takes more skill to use, as the player must avoid becoming stuck in a difficult spot. I therefore added two nodes, Disable Movement and Stop Movement Immediately, in between the Branch and Set boolean nodes from earlier. At the end, movement is resumed via a Set Movement node, which allows the player to move again as usual.

After testing my code from here, I noticed a problem, where the character could not jump unless they were attacking. This was a fault in my state machine, where I needed to have the attack state as a separate state to the jump cycle, so the character can perform one or the other.

This also raised a new issue, where the player could attack whilst in the air. This looked wrong, because the state machine could be interrupted mid-jump, stopping the jumping animation and suddenly beginning the attacking animation. To prevent this, in my ThirdPersonCharacter blueprint I added another Branch, which checks if the character is falling; the player can only attack if the character is not falling, which solved this problem. After this, the player attacking ability worked completely as intended.
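Taken together, the attack constraints described in this section behave roughly like the Python sketch below. It is an illustration only, since the real logic lives in Blueprint nodes; the class, field, and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Player:
    attacking: bool = False  # the Attack boolean
    falling: bool = False    # result of the falling check
    can_move: bool = True    # False while movement is disabled

    def try_attack(self) -> bool:
        """Called on LMB. Returns True only if an attack actually starts."""
        # Branches: no attack while already attacking or while in the air.
        if self.attacking or self.falling:
            return False
        self.can_move = False  # Disable Movement / Stop Movement Immediately
        self.attacking = True  # Set Attack boolean
        return True

    def finish_attack(self) -> None:
        """Called when the attack animation ends."""
        self.attacking = False
        self.can_move = True   # movement resumes as usual
```

Repeated clicks during an attack, or clicks while airborne, simply return False and change nothing.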

Completing character: Eye emission

For the eyes of my character, I had wanted the eyes to glow, rather than be a physical entity. To do this, I needed to create an emissive material which would replace the current green material for the eyes. I started by creating a new base material, which would have the values added to create the emissive material. This used a Colour parameter and an Emission node, which would be multiplied and fed into the Emissive Colour of the material.

I then created a material instance off of this material base, so that I could feed these values into the previous material shown above. The purpose of this is to easily preview and create the emission material.

The parameters from earlier were now available in the material instance. I simply modified the colours and emission values until I had a result I liked.

I then applied this material to my character to get this result. I repeated this process for a red emissive material to be used on my enemy AI character, with red being a conventional colour for enemies in a game.
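The multiply step in the base material amounts to scaling each colour channel by the emission value. A minimal Python sketch of that arithmetic follows; the function name and the example values are hypothetical, not the ones used in the project.

```python
def emissive_colour(colour, emission):
    """Scale an (R, G, B) colour by an emission strength, as a Multiply node would."""
    return tuple(channel * emission for channel in colour)


# Example values only: a pure green eye colour with an emission strength of 5.
green_glow = emissive_colour((0.0, 1.0, 0.0), 5.0)
# A red variant for the enemy reuses the same function with a different colour.
red_glow = emissive_colour((1.0, 0.0, 0.0), 5.0)
```

Keeping the colour and the strength as separate parameters is what lets a material instance change either one without touching the base material.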

Main Menu:

Setting up the interface:

For my game, I wanted a main menu, which would give the player a few options but most importantly the ability to start the game, rather than being immediately thrown into the live game. This gives the player time to grasp the controls, get their settings correct, etc. I started by creating a new HUD Class.

Once this was done, I edited the HUD class blueprint to be drawn immediately when the event was played. However, this would not fully work because this would simply draw it over the screen of the game. Therefore, I needed a new level to serve as the main menu. This would also be efficient as it would not unnecessarily load the game in the background whilst loading the menu of the game. I simply created a blank template from the preset maps Unreal Engine offers, and named this MainMenu.

To set up the settings correctly when starting the game, I needed a specific game mode for the main menu to run off of. I simply copied the existing game mode from the default template I had started in, and renamed it.

I was now able to place this in the world settings, where this game mode would be the initial game mode when starting this game.

Main menu UI:

For the main menu itself, I started by creating a new Widget blueprint. I placed some Vertical Boxes in the area, which were very useful as they would hold several buttons in the designated area. I created three of these, as I wanted my main menu to include the main menu itself, an options panel and a controls panel, which meant I needed three sets of buttons to complete the interface. Buttons are especially useful as they can be easily bound in the editor to perform an action when they are clicked (the OnClick Event). It's worth noting that I was using the anchor points correctly throughout the designing of this UI.

Once I had finished the main menu design, I turned all of the buttons and vertical boxes into variables, so I could easily reference them in the Event Editor. I was able to set up some simple code to easily transition between the menu options and main menu by setting one set of buttons to hidden, and the other to visible when clicked. This required a reference of the sets of buttons, which I could do as I had set them as variables just before.

I then did the reverse of this when the return button had been pressed to let the player return to the main menu, creating a loop. I did the exact same for the Controls panel as well.
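The panel switching described above can be sketched in Python as follows. This is an illustration only: the real menu sets visibility on Vertical Boxes inside the Widget blueprint, and the panel names and the single helper function here are hypothetical simplifications.

```python
VISIBLE, HIDDEN = "visible", "hidden"


def show_panel(panels: dict, name: str) -> None:
    """Make one panel visible and hide every other panel."""
    if name not in panels:
        raise KeyError(f"unknown panel: {name}")
    for key in panels:
        panels[key] = VISIBLE if key == name else HIDDEN


# The three sets of buttons: main menu, options panel, controls panel.
panels = {"main": VISIBLE, "options": HIDDEN, "controls": HIDDEN}
show_panel(panels, "options")  # Options button clicked
show_panel(panels, "main")     # Return button clicked
```

Each button click maps to one call, so the return buttons are just the same operation pointed back at the main panel.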

For the Quit game button, I simply added a Quit Game node, which would exit the game. For the Start game button, I added an Open Level node to open Level1, which was the main game world I had been working on. This is why it was helpful to have my menu as its own separate level, so I could easily transition to the new level.

For the options panel, I wanted to give the user the ability to change resolution so the game would suit the monitor they were using a bit better. This could have been more extensive, but I felt this was fine for the purpose of a prototype, and this menu is very dynamic too, so it's very easy to add more buttons and options here later if I wanted to. I used a command to do this, as it was a very simple solution and easy to represent in the Event graph.
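The exact command is not named here. Assuming it was Unreal's `r.SetRes` console command, each resolution button would pass a string of the form built by the Python sketch below; the helper function and the chosen resolutions are hypothetical.

```python
def set_res_command(width: int, height: int, mode: str = "f") -> str:
    """Build an r.SetRes command string, e.g. 'r.SetRes 1920x1080f'.

    mode: 'f' fullscreen, 'w' windowed, 'wf' windowed fullscreen.
    """
    if mode not in ("f", "w", "wf"):
        raise ValueError(f"unsupported window mode: {mode}")
    if width <= 0 or height <= 0:
        raise ValueError("resolution must be positive")
    return f"r.SetRes {width}x{height}{mode}"


# Example strings for a few hypothetical resolution buttons.
commands = [set_res_command(w, h) for (w, h) in [(1280, 720), (1920, 1080)]]
```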

Pause Menu:

I think a pause menu is equally important, because not only does it give the user a chance to take a break if needed, but they can also check the controls, options, or exit the game quickly if needed, adding convenience. I started by creating a new Widget blueprint for the pause menu, and designing it in a similar fashion. One extra detail I added was an effect that blurs the background of the screen, which I thought would work nicely as it would not block the screen but would clearly indicate the pause menu was active, in case the user thought the menu was only an overlay and the game was not truly paused.

First, I wanted to prevent the character from moving or clicking in the game while paused. Otherwise the pause menu could be abused, and the player could move around or attack whilst paused, giving a huge advantage. It would also be a bit distracting. I began with the resume button, which would do the opposite, working within the Event graph of the Widget. I dragged a node called Set Input Mode Game Only from the OnClicked event of the resume button, which would set the inputs to in-game only, preventing any UI clicks. This would also stop the mouse cursor from being visible on the screen (it is off by default). I then used a Remove from Parent node to remove the pause menu from the screen, which leads directly to a Set Game Paused node set to false, completing the process of unpausing the game.

Now I wanted to work on the pause menu, and started with having it be drawn on screen via the ThirdPersonBlueprint. I used the Tab button for this, because I personally am comfortable with pressing this button. I then used an IsValid node to check whether the target object (being the pause menu) was already on screen or not, which was done by promoting the pause menu to a variable (explained in next image). If this was not on screen already, the input would draw the pause menu via the Create Widget node.

Once the pause menu had been drawn, a few settings needed to be changed. The first thing I did was create a reference to the pause menu, which I did by right-clicking on the object slot from the Create Widget node, as it would reference the selected class (my pause menu widget). This meant I could reference it in other sections of the code such as in the previous image. I then enabled the mouse cursor with a Set Show Mouse Cursor boolean, which I set to true. I then set the Input mode to UI only and then set the game to paused, the reverse of earlier to resume the game. This section of code worked to correctly pause the game with no apparent flaws.
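The pause and resume flow can be summarised with the Python sketch below. It is only a model of the state changes, not the Blueprint graph: all names are hypothetical, and clearing the stored widget reference on resume is a simplification assumed for this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GameState:
    pause_menu: Optional[str] = None  # the variable promoted from Create Widget
    show_cursor: bool = False         # cursor is off by default in game
    input_mode: str = "game_only"
    paused: bool = False


def press_pause(state: GameState) -> None:
    """Tab pressed: draw the pause menu unless it is already on screen."""
    if state.pause_menu is not None:      # IsValid check
        return
    state.pause_menu = "PauseMenuWidget"  # Create Widget, stored in the variable
    state.show_cursor = True              # Set Show Mouse Cursor
    state.input_mode = "ui_only"          # Set Input Mode UI Only
    state.paused = True                   # Set Game Paused (true)


def press_resume(state: GameState) -> None:
    """Resume clicked: reverse every step taken when pausing."""
    state.input_mode = "game_only"  # Set Input Mode Game Only
    state.show_cursor = False
    state.pause_menu = None         # Remove from Parent (simplified here)
    state.paused = False            # Set Game Paused (false)
```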

Some minor fixes:

After doing a thorough test of my game at this point, I had run into a couple issues. The first was that the main menu did not have the cursor enabled by default. This is something I had missed because I had previously been previewing the game from the viewport, but this time I had launched the game fully to test it further. Previewing the game in the viewport usually enables the cursor by default, which is how I missed this the first time, so I quickly added the relevant nodes in the Pre-Construct of the main menu widget to solve this.

Another fault I had noticed was one that had been persisting for a while in my prior tests, but I thought of it as a glitch. However I later discovered that this is a problem with my settings for the level sequence I had created. I have mentioned earlier that I had set my animation in the sequence to 'Keep State' so the door would remain open, but upon starting the level again, the door would have stayed open unless the sequence had been refreshed. This was because the Restore state in the level sequence details (when clicking on it in the content browser) is unticked by default. I was able to fix this issue by ticking the box so the problem would no longer persist.

Finally, I had made a few changes to the health bar HUD I had created earlier to improve its appearance, by adding a bit of text to say health as well as a black bar across the top of the screen to make it easily visible. I set the black bar to a lower opacity to avoid blocking the view of the user so much. In addition, in the screenshot above you can see some additional code, which I used to update a new text box in the UI to display the current level the player was on. This would not serve any purpose as there was only one level in my prototype, but this was something I wanted to demonstrate functionality for. I did this by first getting the current level, and then converting this into a string. I also removed the text 'Level' from the level name 'Level1' so I would simply get the number, so I could have the level number displayed in a more user-friendly way.

This was the result. I had also coloured the level text a different colour to differentiate it a bit. Finally, I had also added another text box next to the health bar to display the exact number of health to the nearest integer value.
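The two text boxes boil down to simple string handling, sketched here in Python for illustration. The function names are hypothetical, and rounding halves upwards is an assumption, as the rounding behaviour used in the project is not stated.

```python
import math


def level_number(level_name: str, prefix: str = "Level") -> str:
    """Strip the 'Level' prefix so that 'Level1' is displayed as '1'."""
    if level_name.startswith(prefix):
        return level_name[len(prefix):]
    return level_name  # leave unexpected names untouched


def health_text(health: float) -> str:
    """Format health as the nearest whole number (halves round up here)."""
    return str(math.floor(health + 0.5))
```

For example, `level_number("Level1")` gives `"1"`, and a health of 29.6 is shown as `"30"`.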

Sound implementation:

For the sounds of my game prototype, I would be sourcing completely unedited but free-to-use sound assets, referenced at the bottom of this blog. I wanted to implement sounds for the following:

- User interface - buttons pressed

- Footsteps of character/enemy

- Door opening sound effect

- Attacking sounds

- Key collected sound cue

I wanted these sound effects because they would be very useful audio cues to the player, as you can communicate a message, such as a successful action, simply by playing a sound that feels positive. Other sounds such as footsteps would also help with immersion and general gameplay.

Interface sounds:

For my main and pause menu sounds, I wanted to have a simple sound effect when a button was pressed. I was able to source a suitable sound, as well as a few other options for free online (Kenney, 2020). These were very easy to add, as I simply needed to select the sound effect (which I had imported into a custom folder) and do this for every button in the user interfaces I had created.

Footsteps:

For the footsteps of the character, I again used the same source of free assets. One thing I wanted was for the footsteps to be slight variations of the sound effect, so they would not sound so repetitive. I was planning to do this from a Sound Cue.

After creating a Sound Cue, I noted that I could instead add my sound effects directly, as displayed above, by adding notifiers to the animations individually. However, this would not add variety, as it would always play the same preset sound effect each time. This is not only very limited and basic, but also harder to edit in future, for example for unique terrain such as a metal floor, which would not sound the same as the dirt floor of my map.

This is where the sound cue comes into play, where I first attached all of the five variations of the sound of foot steps. I then hooked these up to a random node, which will randomly play one of these sound effects when called, which I then hooked into the output node at the end.
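In effect, the Random node picks one of the five attached sounds each time the cue is played. A small Python sketch of that behaviour follows; the asset names are hypothetical, and a uniform random choice is an assumption about how the node was configured.

```python
import random

# Five footstep variations feeding the Random node (names are hypothetical).
FOOTSTEPS = [f"footstep_{i}" for i in range(1, 6)]


def play_footstep(rng=random) -> str:
    """Return the variation to play for this step, chosen at random."""
    return rng.choice(FOOTSTEPS)


# Each animation notify would trigger one call like this.
step_sound = play_footstep()
```

Supporting a different surface later, such as a metal floor, would then only mean swapping the list of variations rather than editing every animation.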

Now this was complete, I could replace the notifiers of individual sound effects from earlier with the sound cue file I had just created. I also needed to check the Follow option so the sound effects would follow the character correctly.

However, upon testing and reviewing my work, I immediately noticed an issue: the footsteps of the enemy character were at full volume from any distance, as I had forgotten to include Attenuation, which makes sounds become quieter the further away they are. To fix this issue, I first needed to create an Attenuation file, which I then attached to my existing Sound Cue file.

Once done, I was then able to edit this file and adjust the settings. I reduced the values to what seemed like an appropriate range, which I could preview with a guide shown in the viewport too. Once I was happy, I saved the settings, and upon testing this had worked.
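To illustrate what the attenuation settings control, the Python sketch below models a simple linear falloff: full volume inside an inner radius, fading to silence over a falloff distance. The linear curve and both default distances are assumptions for illustration, not the values used in the project.

```python
def attenuated_volume(distance: float,
                      inner_radius: float = 200.0,
                      falloff_distance: float = 1000.0) -> float:
    """Return a volume multiplier between 0.0 and 1.0 for a listener distance."""
    if distance <= inner_radius:
        return 1.0  # close enough to hear the sound at full volume
    if distance >= inner_radius + falloff_distance:
        return 0.0  # beyond the falloff range the sound is silent
    # Linear fade between the inner radius and the end of the falloff range.
    return 1.0 - (distance - inner_radius) / falloff_distance
```

Reducing either distance shrinks the range at which the enemy's footsteps can be heard, which is the adjustment described above.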

Game world sound effects: